Responsible AI in Learning: A Student-Informed Approach

Biography

Dr Wennie Subramonian and Dr Maryam Malekshahian are Senior Lecturers (Teaching and Scholarship) in the Department of Chemical Engineering. Wennie also contributes as the Department’s Deputy Admissions and Outreach Lead. Her work focuses on enhancing inclusive student learning through the responsible use of AI in both classroom and lab settings, as well as in outreach projects. She leads student partnership projects that embed student voice in shaping inclusive, effective teaching and learning practices.

Maryam is the department’s Equality, Diversity, Inclusion, and Accessibility (EDIA) Lead and former Academic Employability Lead. Her current work explores how AI can be used thoughtfully and equitably in shaping an inclusive engineering education.

At the recent UoM Teaching and Learning Conference 2025, they invited colleagues to consider how students can move from passive users to co-creators of responsible AI practices.

What motivates us to explore this area?

Our work is driven by a notable gap in ethical guidance for students using AI in higher education. To date, only one ethical framework has been identified for higher education and it is primarily tailored to educators, not students. Developed by the Asian University for Women and the International University of Bangladesh, this framework does not sufficiently address the needs or perspectives of students as AI users. Moreover, there is limited evaluation of how AI-supported learning activities influence student learning outcomes in Engineering. In the past two years, only six such studies have been published, highlighting the need for further exploration in this area.

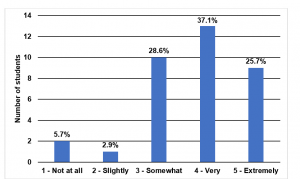

Our departmental student voice also revealed key concerns. In a recent classroom study, 94% of Chemical Engineering students (n=35) expressed worry about becoming over-reliant on AI tools, highlighting the importance of equipping students with the skills to use AI critically and responsibly.

Figure 1: Main concerns of students using AI (n=35).

Student Co-Creation in Action

Our project, co-led with MEng student Mr Ahnaf Saumik, began with a core question: How can students be supported to use AI critically and responsibly in their learning?

Together, we co-developed a concise two-page guide and classroom learning activities focused on responsible AI use, addressing academic integrity, data privacy, and bias. One key activity used the Bhopal disaster as a case-based learning exercise for Year 3 Chemical Engineering students, who used AI tools to support their reflection on engineering ethics, safety, and problem-solving.

This partnership placed students not only at the centre of the design but at the centre of the conversation, ensuring the language, concerns, and practices in the resources were truly student-relevant.

What our students say about the Responsible AI Guide and Learning Activity

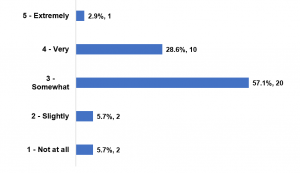

Over 91% of students found the Responsible AI learning activity useful, with 62% rating it as very to extremely useful (Figure 2). Students particularly valued the ethical guidance provided, commenting that “the ethical points were helpful,” and praised the resources as “really good and concise.” Some students suggested future improvements, such as incorporating “more techniques” to support AI use in learning.

However, only 32% of students reported feeling confident in the AI-generated responses when completing the learning activity (Figure 3). This highlights a continued need for academic support in helping students critically evaluate and apply AI outputs, particularly in problem-solving contexts.

Figure 2: Effectiveness of the learning activity in applying the guide’s information (n=35).

Figure 3: Students rank their confidence in the accuracy of AI outputs for the learning activity (n=35).

Wicked Problem Set Workshop: Moving from Tools to Staff Reflection

In our workshop, participants (academics and professional services staff) explored the student-designed guide and learning activity and reflected on their relevance for teaching. Instead of completing the learning activity, they focused on the assumptions, risks, and opportunities that AI raises in ethically complex tasks. We then moved into a wider discussion: How can educators foster critical, student-informed AI use across disciplines? What support do staff need to confidently and responsibly embed AI into their practice?

Key Insights from the Room

We invited participants to complete a short survey to help shape future support initiatives. Some highlights:

- 89% found the session useful or very useful in helping them reflect on AI in their teaching.

- 78% said the student-designed guide and activity gave them new ideas for their own practice.

- Participants valued practical student-facing tools, clear guidance on academic integrity, and hands-on learning design using AI.

Preferred formats for further support included in-person workshops, online toolkits, and short blog case studies.

What our student co-creator says

Mr Ahnaf Saumik: “Important that students have a say in potential tools they’ll be using in the future in both education and work.”

“As a student, my experience and perspective was used to make the guide and activity. Notably, this meant the language and activity was more accessible and relevant to current concerns amongst students with regards to using AI responsibly compared to existing guides and research which are primarily made for educators.”

Concluding remarks

AI is already integrated into many aspects of student learning, from research to problem solving. However, its responsible use must be supported and guided rather than assumed. Students can be influential co-creators in shaping how AI is used meaningfully in education. Their perspectives ensure that AI learning practices are practical, relevant, and aligned with real learning needs. Tools informed by students, such as concise guides and structured activities, can promote critical engagement and build confidence in using AI responsibly. Teaching with AI requires more than technical skills; it involves critical reflection on academic integrity, bias, and data privacy.

Looking Ahead: Building a Culture of Responsible Use

This work continues to evolve. Insights from the workshop discussions and survey responses will guide future developments, including an ITL workshop series and staff-focused resources on student-led approaches to using AI in teaching and learning. We believe that responsible use of AI is not a checklist, but a mindset. When students are empowered as partners, they can play a leading role in developing thoughtful, ethical, and effective practices in higher education.

Get involved

If you’re interested in co-developing AI-in-learning resources, sharing your own case study, or exploring student partnerships in teaching innovation, we’d love to hear from you.

wennie.subramonian@manchester.ac.uk

maryam.malekshahian@manchester.ac.uk

(MEng in Chemical Engineering graduate of July 2025)

Further Reading:

Student-facing

- Artificial Intelligence: Using AI in your learning (UoM Library)

General

- International Center for Academic Integrity

- Russell Group Principles on the use of generative AI tools in education

- Quality Assurance Agency: how can generative AI be used in learning and teaching?
