Designing Better Assessments through Innovative Testing Methodology

David Schultz is Professor of Synoptic Meteorology within the Department of Earth and Environmental Sciences. He is the author of the award-winning Eloquent Science: A Practical Guide to Becoming a Better Writer, Speaker, and Atmospheric Scientist and has published over 180 peer-reviewed journal articles on meteorological research, weather forecasting, scientific publishing, and education. David is a Senior Fellow of the Higher Education Academy (SFHEA) and twice winner of the University Teaching Excellence Award.

In this post, he talks about what prompted him to propose his ITL Fellowship project (2021/22) and its key takeaways.

Your ITL Fellowship project focused primarily on designing better assessment questions. Can you set the scene for us on why you chose this as your focus?

The project came about because of the perpetually low scores for assessment and feedback in the NSS (National Student Survey). I always seek feedback from my students beyond the University's standard UEQs (unit evaluation questions) because I want information about my particular assignments so that I can improve them. Those surveys told me that the students appreciated the feedback I gave them on their assignments, so I knew I must be doing something right.

Also, I came to this country in 2009/2010 from the USA, where the tradition of sitting final exams several weeks after the course has finished isn't as strong as it is here in the UK. In my experience, there was more opportunity for feedback between the instructor and the students in the USA, and I think that has influenced my approach to assessment in general.

When my co-instructor Rhodri (Jerrett) and I were tasked with creating a 100-point multiple-choice final exam for the course that we taught, we agreed that we wanted to do something different within this format.

The Director of our Institute of Teaching and Learning loves a BOGOF (Buy One, Get One Free)! Your project exemplifies this perfectly. Can you explain?

Well, if our assessment format was going to be multiple-choice, we wanted to include more application-type questions. My pitch when bidding for the fellowship was that these kinds of questions offer two key benefits:

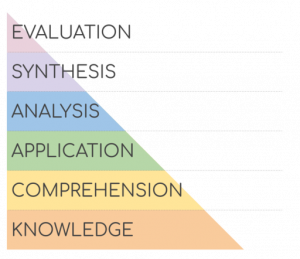

- Higher-level thinking skills: It raises where we are hitting on Bloom’s taxonomy to the ‘application and evaluation and synthesis and creation of new knowledge’ level rather than the questions testing simply ‘memorization and understanding’, which lie at the bottom of the pyramid. So that was certainly a goal. If we couldn’t ask essay questions or do other kinds of assessments, then then we could at least get the students thinking along those lines through these multiple-choice questions that we devised.

- Assessment security: The second issue was to ensure that we kept the test as fair as possible. We knew that, because typical multiple-choice questions are pretty straightforward, it’s possible for a student to just look up answers. We knew that during 2020/21 students were going to be taking the test at home, so it was important to us that that we design it so that a student couldn’t cheat on it. And that even if a student was tempted to just look up an answer, it would be hard to find answers for our questions online. That’s why the title of my project was ‘How to design cheat-proof multiple-choice questions’.

Fig. 1 Bloom’s taxonomy

Why assess by means of a multiple-choice exam? I'm guessing that you would ideally have chosen a different assessment type, but there are pros as well as cons to multiple-choice questions… Can you give us a defence of multiple-choice questions?

I know that multiple-choice questions are controversial, but I think there are probably pros as well as cons to them. I don't have anything in principle against multiple-choice questions as part of a balanced diet:

- First of all, some students do really well on multiple-choice questions and not so well on essays, so they should have a chance to excel too.

- Sometimes I just want to check that the students do actually understand a definition, or can identify something on a map or whatever—it demonstrates functional knowledge.

- It also serves the purpose of getting the student into the test. They're feeling nervous and anxious, and if you ask them to solve the mysteries of life in the first essay question, they're not going to do very well! So, give them something that you hope they can answer because it's straight out of the book or the lecture material, or because you've emphasized it repeatedly. So what if they get 20 or 30 'free' points? They're then into the test, they feel comfortable, and that's when the harder questions can hit. At least they go away thinking, "Oh, I think I did some things right," rather than, "I got 0 on that test."

- They’re also possible to do online, which was important during the COVID-19 pandemic (and has always been relevant for distance-learning courses).

- Of course, the fact that a test with only multiple-choice questions is easy to mark, and can now be automated, is part of the story.

Specific to my project, other advantages of including multiple-choice questions that test higher-level thinking skills include:

- There's no better way to prepare students for graduate employment than to get them used to thinking about these kinds of 'authentic assessment' questions. The higher you go up Bloom's taxonomy, the more students are taking the knowledge that they were supposed to gain in the course and applying it outside the narrow context in which it was presented in a one-hour lecture. And that's what we do in real life: apply the rules to new contexts or data or whatever. I think it's so interesting to see that you can combine that level of complexity with multiple-choice questions.

- The students' experience of the assessment itself is better. Maybe it's because I've been designing questions, tests and assessments, and teaching, for so long, but some tests that I see just look so ordinary and so boring to take. Now, because we're pushing up their level of critical evaluation, maybe students feel they can say, "Well, I didn't see this stuff in class," and perhaps that's true: they didn't see it exactly as it was presented. But the scenarios that we talked about in lectures are certainly similar to the things that we were expecting them to learn.

- Plus, it really stretches the best students, so the mark distribution spreads out more, allowing better discrimination among the different grades.

What are the key take-aways from your project?

The key message is, really, that if you're going to design multiple-choice questions, you don't have to ask simple knowledge/regurgitation-type questions. You can pose real-life questions that students might encounter in their jobs, or a hypothetical scenario, or whatever. People might think that multiple-choice questions and these sorts of critical-thinking skills are worlds apart. But you can do it. It just takes some creativity, and you have to be open to the idea.

What’s next? Are you planning a follow-up workshop to the one you gave back in April 2022?

Yes, I'm liaising with the ITL to run a follow-up workshop this semester. There was so much discussion in the last workshop that we didn't get to the second part of my original plan for the session, which was having the attendees design their own questions. The idea was that people would put some questions up on the screen and then get feedback from the group. Now that we're not constrained to sitting in a Zoom room, I'd really like to bring people from all these different disciplines across the University together in a classroom, workshop some possible multiple-choice questions, and just let people's imaginations run wild. That mix would really inspire me, and I'm sure we would learn a lot.

Further information

- Designing cheat-proof multiple-choice exams to improve higher-level thinking skills (narrated video presentation)

- How to design cheat-proof multiple-choice exams to improve higher-level thinking skills (PDF of PowerPoint slides)

0 Comments